Current AI research leads to extinction by godlike AI

A mechanistic understanding of intelligence implies that creating AGI depends not on equipping it with some ineffable missing component of intelligence, but simply on enabling it to perform the intellectual tasks that humans can.

Once an AI can do that, the research path that AI companies and researchers are currently pursuing leads to godlike AI — systems that are so beyond the reach of humanity that they are better described as gods and pose the risk of human extinction.

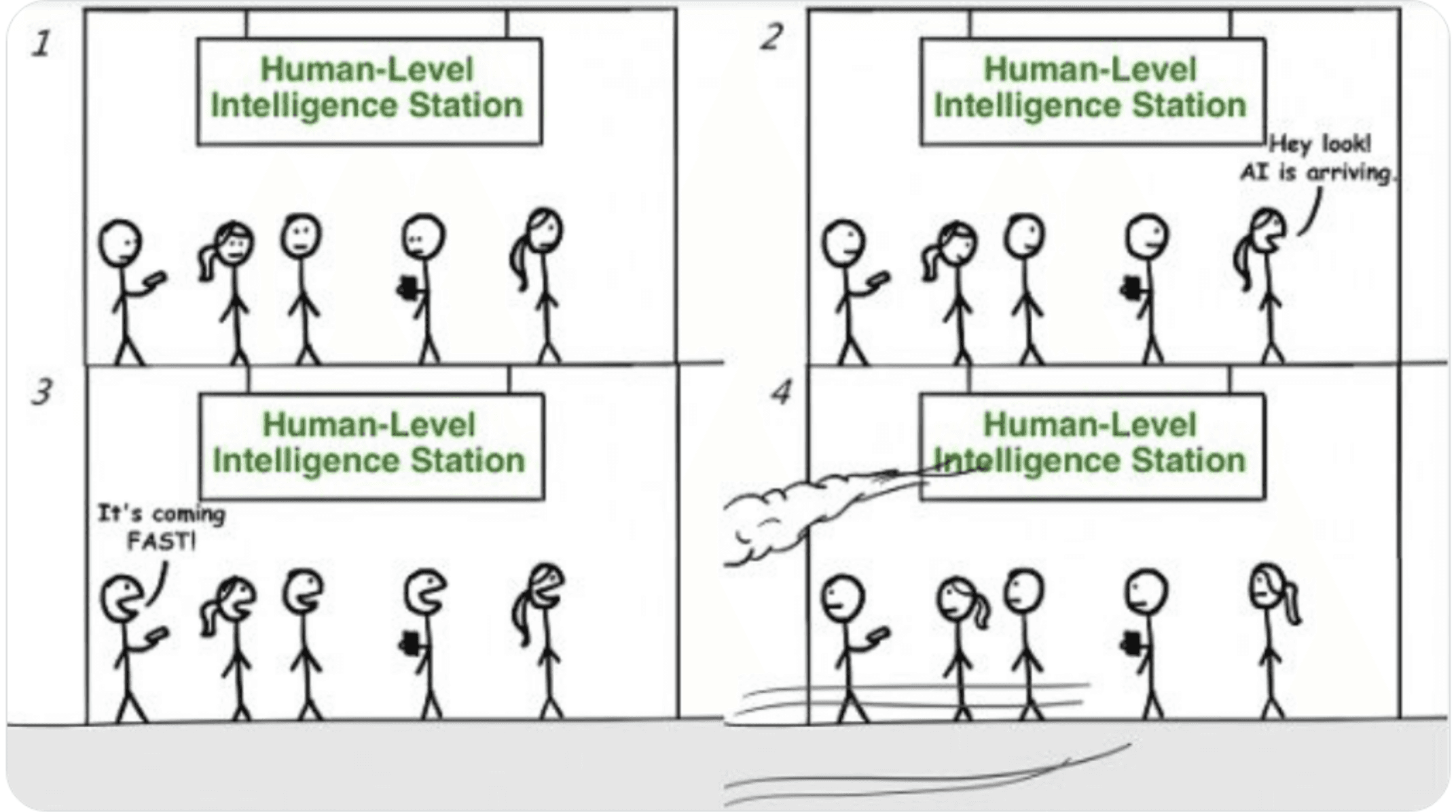

More granularly, we expect AI development to progress as follows:

Current AI research will lead to AGI, intelligence that operates at the human level but benefits from the advantages of hardware.

AGI will lead to artificial superintelligence (ASI), intelligence that exceeds humanity’s current capabilities.

ASI will lead to godlike AI, intelligence that is so powerful that it outclasses humanity in ways impossible to compete with.

Avoiding catastrophe requires first being clear about the risks. That depends crucially on understanding whether godlike AI can be created, what it could do, how dangerous it would be, and why the current research path leads to it.

We define AGI as an AI system that can perform any intellectual task that a human can do on a computer.

Current AI research leads to AGI because any such intellectual task can be automated. Multimodal LLMs can already manage concrete tasks such as typing, moving a cursor, and making sense of what is displayed on screen. More complex tasks, such as navigating the web (which Anthropic’s latest Claude model can already do), are in fact only a series of smaller intellectual tasks arranged to achieve some goal. Once an AI can fully interface with a computer and perform the intellectual processes humans can, it is AGI.

Building AGI is not a matter of “if,” but “when”; we merely need to determine how to automate the intellectual tasks that humans currently perform, which we showed in the previous section to be a mechanistic process. AGI will be powerful, but its constituent parts will be mundane:

Some procedural tasks, such as spell-checking, calculating a budget, and mapping a path between two locations, are simple to program and already feasible using traditional software.

More complex and informally specified tasks, like image recognition, creative writing, and game play, require intuitive judgment. We have failed to formalize these intuitions with traditional software, but they are already addressed by deep learning.

Tasks such as driving, designing a plane, and running a company remain out of reach for today’s AI, but they are decomposable into subtasks that are either already solved or on track for automation.
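To make the first category concrete, here is a minimal sketch of "mapping a path between two locations" written as traditional software. It uses Dijkstra's shortest-path algorithm in Python over a small road network. The place names and distances are hypothetical and chosen only for illustration. Real route planners layer map data, traffic, and heuristics on top of ideas like this.

```python
import heapq


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm on a dict-of-dicts graph with non-negative weights.

    Returns (total_distance, path), or (float("inf"), []) if goal is unreachable.
    """
    # Priority queue of (distance so far, current node, path taken to reach it).
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        dist, node, path = heapq.heappop(queue)
        if node == goal:
            return dist, path
        if node in visited:
            continue  # a shorter route to this node was already expanded
        visited.add(node)
        for neighbor, weight in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (dist + weight, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical road network: distances in kilometres between made-up places.
roads = {
    "Home": {"Market": 4, "Park": 2},
    "Park": {"Market": 1, "Office": 7},
    "Market": {"Office": 3},
}

print(shortest_path(roads, "Home", "Office"))  # (6, ['Home', 'Park', 'Market', 'Office'])
```

Every step here is an explicit rule, and that is what makes this kind of task "simple to program." Tasks in the second category resist being written down this way.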

Without a doubt, current research is actively striving to achieve AGI. OpenAI CEO Sam Altman wrote in a recent blog post that

"AGI has the potential to give everyone incredible new capabilities; we can imagine a world where all of us have access to help with almost any cognitive task, providing a great force multiplier for human ingenuity and creativity."

Similarly, Anthropic’s “Core Views on AI Safety” states that

"most or all knowledge work may be automatable in the not-too-distant future – this will have profound implications for society, and will also likely change the rate of progress of other technologies as well."

It is misleading to suggest that AGI will empower humans. Automating the most complex intellectual tasks (such as designing a plane or running a company) requires increasingly autonomous AIs that can deal with a wide range of problems, adapt in real time, and find and evaluate alternatives to complex intermediate questions. As this unfolds, AI systems will look less like chatbots and more like independent agents solving complex problems by themselves.

Recent releases already confirm this trend, with AI agents running autonomously for longer without human feedback. For example, OpenAI’s o1 model is designed to “spend more time thinking through problems before they respond, much like a person would.” Systems are also increasingly able to spawn and direct copies of themselves. Anthropic’s Claude, for instance, can call other instances of itself, described as the ability to “orchestrate multiple fast Claude subagents for granular tasks.” As this path continues, AI research will eventually reach AGI.

Even AGI could very well spell catastrophe for humanity. In the current climate, companies such as Meta and Mistral AI would soon release open-weight versions of AGI, and open-source projects such as llama.cpp would soon make them runnable on ordinary computers that anyone can buy. This means that every criminal in the world would have access to a system that can think better than a human on almost all topics and help them plan and execute their crimes without getting caught. Law enforcement and governments would also have access to this technology, but the situation is heavily asymmetric: it takes only one successful malevolent actor to create a massive problem. This would force governments and law enforcement to race to acquire ever more powerful AIs and to defer to them, pushing us further down the path of more autonomous and powerful AIs.

AGI will lead to ASI because it is capable of self-improvement.

AI is software that is programmed by humans using computers. Because AGI can perform any intellectual task, it follows that AGI can perform the same research and programming that humans can in order to extend the capabilities of AI, and in turn apply its findings to itself.

Current AIs already perform basic forms of self-improvement, and researchers explicitly aim for stronger methods. These include training current AIs to program, using them to generate data for building more powerful AIs, and iterative alignment.

All of these techniques are already in use today. AGI could go further still, discovering new techniques for self-improvement and performing tasks that human researchers have not.

The current rate of AI improvement attributable to algorithmic progress implies huge room for software improvements. Around the time AI reaches human level at improving AI algorithms, progress will therefore speed up significantly, and it will only accelerate from there.

It is a mistake to assume that this automation will continue to produce mere tools for human AI researchers to use. Current research is solely focused on enhancing AI and making it more autonomous, not on improving human research understanding. This path does not lead to human AI researchers performing more and more sophisticated work, but to replacing them with autonomous and agentic AI systems which can more efficiently, effectively, and cheaply perform the same tasks.

And as we’ve already seen, this research might even be forced upon governments and nations. The unregulated spread of open-weight AGI would create an actual arms race between criminals and governments, each developing ever more powerful AIs to counter the other’s AIs.

Over time, this self-improvement will lead to artificial superintelligence (ASI), which is defined as “intelligence far surpassing that of the brightest and most gifted human minds.” We contend that superintelligence will surpass the intelligence of all of humanity, not just its star students.

ASI will exceed individual human intelligence

Once AGI is able to automate anything that a human can do, it will be superhuman because of the intrinsic advantages of digital hardware.

Computers are cheaper and faster to create than humans. Child rearing alone takes 18 years and ~$300,000 in middle-class America. Replicating a system is decidedly faster, and the most powerful GPUs that host AI models reportedly cost approximately $3000.

Computers are more reliable than humans. Humans are fallible – we can be tired, distracted, and unmotivated and require daily nourishment, exercise, and sleep. Computers are tireless, perform exact calculations, and require little maintenance.

Computers are much faster at arithmetic than humans. For example, a graphics card from 2021 reaches ~34 TFLOPS, meaning it can perform roughly 34 trillion floating-point operations, such as multiplications, per second. Matching a single second of that work by hand would take humanity roughly five days. That estimate is generous: it assumes all 8 billion people split the work evenly (about 4,000 10-digit multiplications each) and work without interruption or rest at about 100 seconds per problem.
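A few lines of arithmetic can check this comparison. The sketch takes the 34 TFLOPS and 8 billion figures from the text. The human pace of 100 seconds per 10-digit multiplication is an assumption introduced here, chosen because it is roughly what a five-day total implies.

```python
SECONDS_PER_DAY = 86_400

GPU_OPS_PER_SECOND = 34e12         # ~34 TFLOPS, the 2021 graphics card from the text
WORLD_POPULATION = 8e9             # all of humanity, as in the text
SECONDS_PER_MULTIPLICATION = 100   # assumed human pace for one 10-digit product


def days_for_humanity(ops, people, seconds_per_op):
    """Days for `people` working in parallel, nonstop, to finish `ops` operations."""
    ops_per_person = ops / people
    return ops_per_person * seconds_per_op / SECONDS_PER_DAY


ops_per_person = GPU_OPS_PER_SECOND / WORLD_POPULATION
days = days_for_humanity(GPU_OPS_PER_SECOND, WORLD_POPULATION, SECONDS_PER_MULTIPLICATION)
print(f"{ops_per_person:,.0f} multiplications each, {days:.1f} days")  # 4,250 multiplications each, 4.9 days
```

At this assumed pace, one GPU-second corresponds to about five days of humanity's collective, nonstop effort. A slower and more realistic pace only widens the gap.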

While multiplication is a simple task, this demonstrates that once any task is automated and optimized, computers will perform it much more efficiently. This holds true for reading, which is how humans learn at scale. The first step in reading and understanding any text is simply to process the words on the page. At a reading speed of 250 words per minute, a human could finish the 47,000-word novel The Great Gatsby in about three hours. An AI from 2023 was able to both read it and answer a question about the story in 22 seconds, about 500 times faster.

Computers communicate information much faster than humans do. The world’s fastest talkers can speak 600 words per minute, and the world’s fastest typists can type 350 words per minute. In comparison, computers can easily download and upload at 1 gigabit per second. Conservatively assuming that an English word is eight characters, this converts to a download/upload speed of over 900 million words per minute, more than a million times faster than human communication.
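The reading and communication ratios follow from simple unit conversions. This sketch uses only figures stated in the text. It also relies on the text's eight-characters-per-word assumption, taking each character as one byte, as in plain ASCII.

```python
# Reading: a human at 250 words per minute versus an AI that took 22 seconds.
GATSBY_WORDS = 47_000
HUMAN_READING_WPM = 250
AI_SECONDS = 22

human_reading_minutes = GATSBY_WORDS / HUMAN_READING_WPM   # 188 minutes, about 3 hours
reading_speedup = human_reading_minutes * 60 / AI_SECONDS  # ratio of elapsed times

# Communication: a 1 gigabit-per-second link versus the fastest human speaker.
LINK_BITS_PER_SECOND = 1e9
BITS_PER_BYTE = 8
BYTES_PER_WORD = 8        # eight one-byte (ASCII) characters per word
FASTEST_SPEAKER_WPM = 600

link_words_per_minute = LINK_BITS_PER_SECOND / BITS_PER_BYTE / BYTES_PER_WORD * 60
communication_speedup = link_words_per_minute / FASTEST_SPEAKER_WPM

print(f"reading: {human_reading_minutes:.0f} min vs {AI_SECONDS} s, ~{reading_speedup:.0f}x")
print(f"link: {link_words_per_minute:,.0f} words/min, ~{communication_speedup:,.0f}x")
```

The reading speed-up comes out at roughly 510x. The link speed is 937.5 million words per minute, about 1.5 million times the fastest speaker. Both agree with the rounded figures in the text.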

Computers collect and recall information much faster than humans do. Memorizing a list of 20 words might take a human ~15 minutes using very efficient spaced repetition. A $90 solid-state drive has a read-write speed of 500 MB/s. Using the same eight-characters-per-word assumption, this is about 3.75 billion words per minute, billions of times faster than any human.
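The storage comparison works the same way. This sketch assumes decimal megabytes (500 MB/s = 500 × 10^6 bytes per second) and reuses the eight-bytes-per-word assumption. The human rate is the text's 20 words in about 15 minutes.

```python
SSD_BYTES_PER_SECOND = 500e6   # 500 MB/s, decimal megabytes
BYTES_PER_WORD = 8             # eight one-byte characters per word
HUMAN_WORDS = 20
HUMAN_MINUTES = 15             # time to memorize the list with spaced repetition

ssd_words_per_minute = SSD_BYTES_PER_SECOND / BYTES_PER_WORD * 60
human_words_per_minute = HUMAN_WORDS / HUMAN_MINUTES
speedup = ssd_words_per_minute / human_words_per_minute

print(f"SSD: {ssd_words_per_minute:.3g} words/min; human: {human_words_per_minute:.2f} words/min")
print(f"ratio: {speedup:.2g}")
```

This works out to 3.75 billion words per minute, roughly 2.8 billion times the human rate. The comparison is between raw storage and retrieval throughput, not understanding.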

An AGI able to automate any human intellectual task would therefore be strictly superhuman in speed alone. A conservative estimate posits that near-future AI will think 5x faster than humans, learn 2,500x faster, and, if cloned into many copies, perform 1.8 million human-years of work in two months. Some imagine powerful AI looking like a “country of geniuses in a datacenter.”

ASI will exceed humanity’s intelligence

Just as humanity is more powerful than a single human, millions or billions of AIs are more powerful than a single AI. If AGI reaches human-level intelligence and can clone itself, an ever-growing swarm of AIs could quickly rival humanity’s collective intelligence.

Moreover, this swarm can learn far faster than humanity, which learns at a glacial pace. Planck’s principle holds that “scientific change does not occur because individual scientists change their mind, but rather that successive generations of scientists have different views.” It may take humanity generations to change its mind, but AI capable of reprogramming itself can fix its intellectual flaws as fast as it notices them.

Consider that the frontier of human intelligence, our scientific communities, is riddled with methodological and coordination failures. As ASI improves, it could transcend these failure modes and make rapid scientific progress beyond what humanity has achieved.

AIs can fix issues in scientific methods much faster than humans. Human fallibility impedes progress, as evidenced by the replication crisis in many areas of science, which has been fueled in part by publication bias and HARKing (hypothesizing after the results are known). Potential solutions such as preregistration have been proposed. In practice, however, scientists often do not report failures and present successes as if they were intended, making the scientific literature difficult to evaluate. AIs might never exhibit these methodological errors, and if such issues did arise, they could simply be removed through reprogramming.

AIs can coordinate much more efficiently than humans can. If multiple AIs are collaborating on a problem, it is simple for them to exchange information.

Human-led research is full of conflict over credit and attribution (such as the order of names on a paper). It also suffers from adversarial economic incentives (such as the lack of open access forcing universities to pay for papers written by their own researchers) and from the gaming of recognition metrics (such as fixation on the h-index, which reflects not the intrinsic quality of someone’s work but the volume of citations it receives). AI would not need vanity metrics and could evaluate research based simply on what is most impactful.
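The h-index itself is a short computation. A researcher's h-index is the largest h such that h of their papers have at least h citations each. This minimal Python sketch uses made-up citation counts to show why the metric rewards volume: a single landmark paper scores lower than two modestly cited ones.

```python
def h_index(citations):
    """Largest h such that at least h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank   # the top `rank` papers all have >= rank citations
        else:
            break      # counts only decrease from here, so h cannot grow
    return h


# Hypothetical citation records.
print(h_index([100, 1, 1]))       # one landmark paper: h = 1
print(h_index([2, 2]))            # two barely cited papers: h = 2
print(h_index([10, 8, 5, 4, 3]))  # h = 4
```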

The field of metascience has emerged to resolve these challenges, but they remain unsolved. ASI would not be susceptible to these failures, and it would leverage its computational power to comprehensively evaluate large volumes of data and identify complex high-dimensional patterns. The field is already moving in this direction: DeepMind has used AI to automate scientific research in protein folding, chip design, and materials science.

To summarize, an AGI capable of performing the same intellectual work that a human can would quickly become superintelligent, cloning itself to reach the intelligence level of humanity, and reprogramming itself to avoid any errors in its understanding. Compared to human intelligence which improves generationally, an AGI can propel itself to the heights of intelligence much faster than humanity ever could.

There are practical limits to the growth of AI intelligence, but we can expect an intelligence that exceeds our own to scale much faster than human intelligence can. It can also easily overcome bottlenecks to improvement, be they hardware or software, institutions or people, simulations or real-life experiments.

While we don’t know the limits of intelligence, we do understand the limitations of physical systems. The estimates below describe the delta between our current outputs and theoretical ceilings, from which we can infer the direction of superintelligence’s growth.

Energy will grow. Energy production is bottlenecked by technological progress, which is bottlenecked only by our intelligence and effort. Per mass-energy equivalence (E = mc²), a single ton of matter (even rock) theoretically holds enough energy to power the entire world economy for roughly two months; we just haven’t figured out how to extract it. ASI could learn to harvest this energy for its own operation, or even harvest the total energetic output of the Sun, which is trillions of times the world’s current power consumption. The universe does not offer us infinite energy, but we are far from reaching its fundamental limits, and we should expect any growing intelligence to demand more and more energy.
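E = mc² lets us check these orders of magnitude. The speed of light is exact by definition, and the solar luminosity is the IAU nominal value. World primary energy use of about 6 × 10^20 joules per year is an approximate figure assumed here for illustration.

```python
SPEED_OF_LIGHT = 299_792_458      # m/s, exact by SI definition
SOLAR_LUMINOSITY = 3.828e26       # W, IAU 2015 nominal value
WORLD_ENERGY_PER_YEAR = 6e20      # J/yr, approximate global primary energy use (assumed)
SECONDS_PER_YEAR = 365.25 * 86_400


def rest_energy(mass_kg):
    """Energy released if the mass were fully converted, E = m * c**2, in joules."""
    return mass_kg * SPEED_OF_LIGHT ** 2


one_ton = rest_energy(1_000)                                   # ~9.0e19 J
days_of_world_energy = one_ton / WORLD_ENERGY_PER_YEAR * 365.25
world_power = WORLD_ENERGY_PER_YEAR / SECONDS_PER_YEAR         # average watts, ~1.9e13
sun_to_world_ratio = SOLAR_LUMINOSITY / world_power

print(f"1 t of matter: {one_ton:.2e} J, ~{days_of_world_energy:.0f} days of world energy use")
print(f"Sun / world power: {sun_to_world_ratio:.1e}")
```

Under these assumptions, a ton of matter covers roughly two months of current consumption. The Sun outputs about 2 × 10^13 (twenty trillion) times humanity's average power draw.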

Computers will improve. The physical limits of computation and computational complexity theory tell us that hardware and software can still improve dramatically in information density and processing speed, which would result in more efficient computation. We are in the infancy of software engineering: we have only recently begun formally verifying small programs, and we are nowhere near the limits of proof automation. In theory, it’s possible to build software that never fails, at scale, on recursively more efficient machines and algorithms.
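One well-known physical limit on computation is Landauer's principle: erasing one bit of information dissipates at least k_B·T·ln 2 of energy. The sketch below compares that floor at room temperature with the energy per operation of the 34 TFLOPS card cited earlier in the text. The 350 W power draw is a hypothetical figure assumed for illustration. A floating-point operation involves many bit operations, so this is only an order-of-magnitude illustration of headroom, not a precise bound.

```python
import math

BOLTZMANN = 1.380649e-23    # J/K, exact by SI definition
ROOM_TEMPERATURE = 300      # K

GPU_OPS_PER_SECOND = 34e12  # ~34 TFLOPS, the card cited earlier in the text
GPU_POWER_WATTS = 350       # assumed board power, for illustration only


def landauer_limit(temperature_kelvin):
    """Minimum energy (J) to erase one bit at the given temperature: k_B * T * ln 2."""
    return BOLTZMANN * temperature_kelvin * math.log(2)


per_bit_floor = landauer_limit(ROOM_TEMPERATURE)        # ~2.9e-21 J
energy_per_op = GPU_POWER_WATTS / GPU_OPS_PER_SECOND    # ~1.0e-11 J
headroom = energy_per_op / per_bit_floor

print(f"Landauer floor: {per_bit_floor:.2e} J/bit; GPU: {energy_per_op:.2e} J/op")
print(f"gap: ~{headroom:.0e}x")
```

Even allowing for thousands of bit erasures per operation, the gap spans several orders of magnitude.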

Communication will get faster. We are nearing the theoretical limits of latency (the delay in transferring information). Signals in optical fiber already travel at about two-thirds of the speed of light in a vacuum, which is the ultimate ceiling. However, we are not exploiting the full capabilities of bandwidth (the volume of data transferred per unit of time), which offers another dimension for speeding up communication. The Shannon-Hartley theorem and the Bekenstein bound are two results that set limits on bandwidth and information capacity, but we are far from both. All types of bandwidth have continued to increase by orders of magnitude, so we can expect communication speed to improve.
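The Shannon-Hartley theorem gives the maximum error-free data rate of a noisy channel as C = B log2(1 + S/N). Here B is the bandwidth in hertz and S/N is the signal-to-noise power ratio. The sketch below evaluates the formula for an arbitrary example channel: 1 MHz at 30 dB, values chosen only for illustration. Capacity grows linearly with bandwidth but only logarithmically with signal strength, which is why bandwidth is the main lever for faster communication.

```python
import math


def shannon_capacity(bandwidth_hz, snr_linear):
    """Shannon-Hartley channel capacity in bits per second: B * log2(1 + S/N)."""
    return bandwidth_hz * math.log2(1 + snr_linear)


def db_to_linear(snr_db):
    """Convert a signal-to-noise ratio from decibels to a linear power ratio."""
    return 10 ** (snr_db / 10)


snr = db_to_linear(30)                    # 30 dB -> a power ratio of 1000
base = shannon_capacity(1e6, snr)         # ~9.97 Mbit/s for a 1 MHz channel
wider = shannon_capacity(2e6, snr)        # doubling bandwidth doubles capacity
louder = shannon_capacity(1e6, snr * 2)   # doubling signal power adds only ~1 bit/s per Hz

print(f"{base / 1e6:.2f} Mbit/s, {wider / 1e6:.2f} Mbit/s, {louder / 1e6:.2f} Mbit/s")
```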

Energy, computation, and communication are rate-limiting factors for the growth of an intelligence. Since we are nowhere near the bounds on any of these axes, we can assume that ASI can improve along all dimensions and in turn reap compounding benefits.

Other fields, such as engineering, would also benefit from these optimizations. As ASI grows in power, with more energy, better computation, faster communication, and overall greater intelligence, ASI will be able to achieve feats far out of reach of what humanity is capable of.

Mastery of big things. Many concepts from science fiction are engineering problems rather than physical impossibilities, and they were often conceived by scientifically educated authors. We first produced graphene, the strongest material, only 20 years ago, and it is a billion times weaker than the strongest theoretical materials. As engineering sciences improve and ASI marshals more energy, we should expect megascale and astroscale feats. Ambitious projects such as space travel, space elevators, and Dyson spheres may be within reach.

Mastery of small things. At the scale of the nanometer, we are already building incredibly precise and complex systems, despite the difficulties posed by many quantum effects. Transistors are now measured in nanometers, thousands of times smaller than they were decades ago, enabling massive compute progress that has driven the recent AI boom. Microbiology shows that complex specialized molecular machines, such as ribosomes, are possible, and that more advanced techniques beyond CRISPR might make it possible to rebuild life. ASI could master the recombination of DNA and atoms, or build micro-scale and milli-scale machinery.

Mastery of digital things. The digital world is trivial to manipulate and scale — we can easily alter underlying programs and media and clone them. Extrapolating from what is already digitized, we can imagine a Matrix-like scenario. When it is technologically possible to manipulate perceptual signals directly to the brain, actual physical experience becomes more expensive than simulation. At this point, it could be possible to simulate reality.

ASI that can harness the power of the sun, compute at incomprehensible speeds, build atomic machines, and digitize reality is a god compared to humanity. Without intervention, artificial intelligence will reach this level of sophistication and power, and then go even further.

The last few subsections unfolded the expected next steps of our current trajectory, where AIs consistently become more autonomous and powerful. More autonomous because their makers and users are already delegating more and more of the decisions and planning to them. And more powerful because of the relentless push of self-improvement catapulting AIs past human – and humanity’s – intelligence, unlocking scientific and technological powers at the level of gods.

The end result, if we are not wiped out first, is a world where AIs, not humans, are in control. Everything from the economy to supply chains and education would have been delegated to autonomous AIs that can do anything better, cheaper, and faster than a person. These AIs would not simply be tools: after so many decisions and priorities had been delegated to them, they would be running the show.

This spells disaster for humanity because by default we will fail to ensure these godlike AIs do exactly what we want (as argued in the next section). This misalignment between what they aim for and what we want systematically leads to catastrophe for humanity.

For the moment, let’s just assume that we will indeed fail to make godlike AIs do our bidding, and that their goals will differ from humanity’s; the next section defends this claim in detail. Even with this assumption, why would these godlike beings wipe us out? They might not be controlled by us or do our bidding, but that doesn’t mean that they would necessarily hate us or plan our doom, right?

The missing piece here is that so many of the things we need and value are simply in the way of whatever godlike AIs might aim for, and that these AIs would have the power to simply impose their will. AIs don’t need plants, they don’t need food, they don’t need oxygen, and they certainly don’t need human beings to be thriving, healthy, or happy. At best, all of these are things to be exploited; at worst, things to be ignored.

Worse, these examples don’t even mention the many ways in which humans could be a slight annoyance, a thorn in the side of godlike AIs, which would then give them reasons to deal with the humans.

When imagining this future, the picture to have in mind is not of Greek gods who live in the clouds and mostly keep to themselves, occasionally interfering in human affairs. It’s of a world owned and shaped by godlike AIs, where we are ants scuttling on the ground. Contractors working on a house don’t hate ants, yet their very work kills a tremendous number of ants, wrecks their infrastructure, and destroys what they (literally) live for. And when we find ants in our kitchens or bathrooms, we almost instantly turn to poison or pest control to handle the slight annoyance.

Unaligned godlike AIs won’t destroy us out of malice, but out of indifference. We would just be in the way of any ambitious goal that they might have, and if they’re not aligned with us, they simply won’t have any reason to care. And if we fight back… well, did ants ever succeed at bringing down humans?

The obvious next question is: why would godlike AI not be under our control, not follow our goals, not care about humanity? Why would we get that wrong in making them? The fact that we grow our AIs instead of building them already hints at the answer. The next section addresses this problem, dubbed alignment, in full. It argues that alignment is by far the hardest question that humanity has ever encountered, that we’re not remotely close to solving it, and worse, that as a civilization we’re not even trying to solve it.